- Global study released on the role of AI in detecting and managing distress

- 1 in 20 users trigger SOS mode every year

- 82% of crisis incidents are AI-detected

Data released today from Wysa’s AI mental health platform reveals the crucial new role that AI plays in mental health crisis mitigation. Wysa studied anonymous data from 19,000 users across 99 countries to understand instances of SOS and user behaviour after triggering the escalation pathway feature. The research follows the company reaching a milestone of 400 Wysa users publicly stating that the app saved their life.

This press release features multimedia. View the full release here: https://www.businesswire.com/news/home/20240415230248/en/

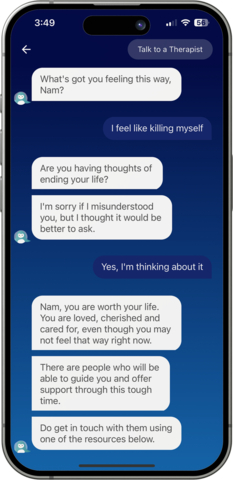

SOS feature activation on Wysa by conversational AI detection. AI detects 82% of mental health app users in crisis through conversation. (Graphic: Business Wire)

The global study found that 1 in 20 Wysa users (5.2%) reported crisis instances on the app in one year. In 82% of these instances, Wysa’s AI detected the crisis and the user then confirmed that they were having thoughts of self-harm or suicide. This confirmation escalated the user to Wysa's SOS interventions. The other 18% of crisis instances were self-selected by users, who were then able to access the best-practice crisis resources on the app, such as helplines, a safety plan and exercises that could help with grounding.

When a crisis instance was confirmed, just 2.4% of people chose to call a helpline, even though the app repeatedly encouraged them to do so. This suggests that even in moments of distress, few people feel ready to reach out for professional help, which highlights the benefit of alternative means of support in times of crisis.

The personal safety plan emerged as the most utilised SOS resource. Co-created on the app by Wysa and the user, it contains vital contacts, reasons to live, calming activities, and warning signs. Over 49.2% of users in crisis selected this feature, underlining its effectiveness in fostering resilience and self-reflection.

Grounding exercises were used by 46.6% of users in crisis. These exercises, which include breathing exercises and mindfulness techniques, help users refocus on the present, increasing their awareness and helping them put their minds at ease.

One-third of crisis incidents occurred during the working day. After the end of the typical workday, SOS triggers remained high, with 31% occurring between 6 pm and 12 am and 28.1% after midnight. The lowest usage of the SOS feature, at just 5%, was recorded in the morning between 7 am and 9 am, before work has started. The data shows that a sense of hopelessness and worthlessness is a key determinant of whether someone reaches out for help (in 59% of cases), and this rises during the day.

Jo Aggarwal, CEO and founder of Wysa, said: “This research sheds light on the role AI can play in mitigating mental health crises. It's evident that AI serves a unique purpose by providing a psychological safe space for people to express concerns. For many individuals, AI conversations serve as a crucial support when they're not comfortable discussing their worries with another person.”

Wysa is dedicated to upholding the highest standards in data protection, privacy, and clinical safety in all operational aspects, especially in managing crises related to self-harm or suicidal ideation among users. Its support interventions, conversations, and clinical flows are meticulously managed by experienced clinicians and psychologists.

One user said: “Wysa sets a safety plan and helps you cope. This app made me feel better in a matter of three minutes. It made sure to check in, it was helpful, and it helped me calm down more and sleep better.”

Read the full report at https://www.wysa.com/role-of-ai-in-sos

Contacts

Alexa Gutwenger

Alexa.Gutwenger@diffusionpr.com